GPT-4, Stable Diffusion, and Beyond: How Generative AI Will Shape Human Society

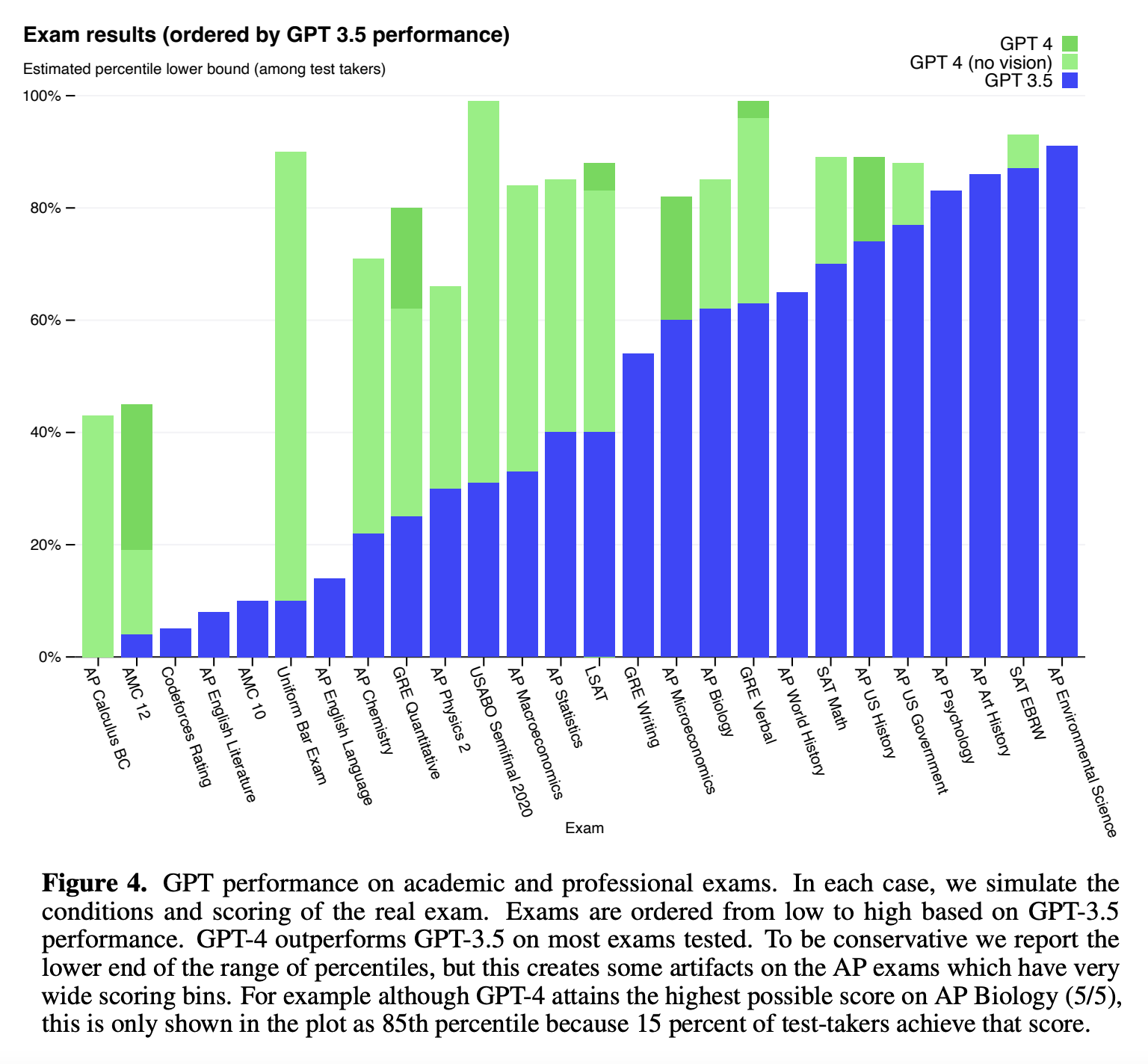

In 2020, I wrote about the GPT-3 model. Late last year, OpenAI released ChatGPT, which was built on a GPT-3-family model (GPT-3.5) and trained using Reinforcement Learning from Human Feedback (RLHF). And now GPT-4 has been released. It has only been out for a few days, but it is already seeing incredible applications such as creating office documents, turning sketches into functional apps, creating personal tutors, and more.

It is not just GPT-based models, either: Stable Diffusion and DALL-E are pushing the boundaries of art, creating stunning visuals from mere textual descriptions. Professional ad agencies, too, are exploring how to use AI, as seen in this Coca-Cola ad and this Crocs ad, which apparently took only 28 minutes to create from scratch.

Suddenly, the pace of advancement in AI has accelerated from a sluggish seep to a torrent; some might say it is no longer something we can control. The dread of hyper-intelligence has long been a staple of our fears: if an AI were to improve itself recursively, there would be no perceivable ceiling to its intelligence. Yet such concerns remain speculation, given our limited understanding of AI’s internal workings (more on this below). We tend to project our apprehensions and anthropocentric tendencies onto AI and assume that it would act as a sentient being would. Rather than fretting over future possibilities, we should focus on the present: AI capabilities are already advanced enough to disrupt society.

Changing Realities: The Societal Impact of Generative AI

Human society has always evolved in a dynamic equilibrium with the technology it wields. Every major technological revolution has been accompanied by a sociological inflection point, as society not only adjusts to the novelty of new inventions but also revises its implicit assumptions, norms, and behaviors.

The cognitive revolution (language and arts), which laid the foundation for civilization by bringing people together, also gave us myths, hero worship, and kings. It took millennia for society at large to loosen the shackles of such institutions. Similarly, the invention of the printing press brought about a new era of mass communication, but also led to the spread of propaganda and the manipulation of public opinion. With the rise of radio and television came new forms of propaganda and disinformation. With the internet and social media, we still haven’t figured out coping mechanisms for fake news and large-scale manipulation of public opinion.

A Few Models to Rule Them All

Generative AI brings with it all of the above risks, but at a scale and reach never imagined before. Almost all large-scale models are derived from a handful of foundation models, which further centralizes both risk and power. When I wrote about GPT-3, three things caught my attention:

- Effectiveness of scale

- Languages can be a model of the physical world

- Emergent behaviors leading to zero-shot and few-shot learning

At the time, however, I thought of GPT-3 only as a precursor of things to come. Recent products like ChatGPT, which are built on GPT-3, have shown that GPT-3 and similar Large Language Models (LLMs) are already good enough.

And good-enough models are easy to use, so their adoption will only continue to grow. As people become more familiar with these tools, they will begin to trust their judgment automatically. In place of a circumspect, potentially biased human, we will have an infallible, impartial arbitrator telling us what is true. But how can we know how it made its decision?

Epistemology of Large Language Models: Defying Human Comprehension

The performance of an LLM comes from its emergent behaviors rather than from explicit instruction. For example, when OpenAI scaled GPT-3 to 175 billion parameters, up from 1.5 billion in GPT-2, they observed few-shot learning, an emergent property that was neither specifically trained for nor anticipated. Researchers have long warned that “despite their deployment into the real world, these models are very much research prototypes that are poorly understood”. The newest models are more of the same, only larger.
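To make "few-shot" concrete, here is a minimal sketch of how such a prompt is typically assembled: a short instruction, a handful of worked examples, and then the new input for the model to complete. The function name `build_few_shot_prompt`, the translation task, and the example pairs are all hypothetical and chosen only for illustration; no model is called and no weights are updated, which is the whole point of few-shot learning.

```python
def build_few_shot_prompt(examples, query, instruction="Translate English to French."):
    """Assemble a few-shot prompt: an instruction, worked examples, then the new query.

    All names here are illustrative assumptions, not part of any real API.
    """
    lines = [instruction, ""]
    for source, target in examples:
        # Each worked example shows the model the input/output pattern to imitate.
        lines.append(f"English: {source}")
        lines.append(f"French: {target}")
        lines.append("")
    # The final entry is left open; the model is expected to continue the pattern.
    lines.append(f"English: {query}")
    lines.append("French:")
    return "\n".join(lines)


examples = [("cheese", "fromage"), ("sea otter", "loutre de mer")]
prompt = build_few_shot_prompt(examples, "peppermint")
print(prompt)
```

Passing an empty list of examples gives the zero-shot version of the same prompt: the instruction followed directly by the query.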

Furthermore, the resources required to train such large-scale models keep them out of reach for many, and commercial incentives give companies little reason to make their models transparent or to attend to the social externalities that arise from their obscurity. In essence, our attempts to grasp the nature of these models resemble those of blind men attempting to describe an elephant.

Cultural Norms Redefined

As mentioned before, large-scale models are largely homogeneous, derived from very few foundation models; their training data is often lopsided and represents only a tiny percentage of the world's languages. The social and political factors embedded in these models entrench a predominant value system and undermine plurality. The prevalence of homogeneous LLMs can lead to cultural conformity, in which individual expression and differences are lost at every level, from the individual to society as a whole.

In the past, LLMs have also produced inaccurate or harmful outputs. While these have been somewhat mitigated through fine-tuning, the notion that a mere handful of individuals could define the parameters of morality with any confidence is problematic. The obscure and hermetic nature of these large-scale models only amplifies our concerns. Chomsky, in his NY Times piece, argues that fine-tuning restricts their ability to be original and opinionated. Thus, engineers “sacrificed creativity for a kind of amorality”.

The Future of Work in an AI-Dominated World

There is always a concern that AI systems will replace human workers in a variety of industries, leading to job losses and economic disruption. Fears of job losses due to technological change are not new. In the early 19th century, textile workers in England formed the Luddite movement to protest against the introduction of new textile technologies that threatened their jobs. Their fears turned out to be misguided in the long run, as new technologies led to industry growth, creating new jobs and increasing productivity.

The increasing ability of AI to outperform humans in certain tasks has raised concerns about AI replacing the human workforce. However, it is more likely that AI will operate in a symbiotic relationship with the human workforce, enhancing and empowering human expertise rather than replacing it. For example, chess engines have long been able to defeat human players, but they are now used to assist Grandmasters in their preparation. It is also important to acknowledge that while new technologies can open new avenues of employment, they will displace existing employment patterns. We, as individuals and as a society, need to be prepared for these changes and adapt accordingly.

The Intersection of AI and Art

With the advent of DALL-E and Stable Diffusion, AI-generated art has been thrust into the mainstream, and concerns have arisen over the potential for plagiarism and disrespect for intellectual property rights. This is like the ancient paradox of the “Ship of Theseus”: when does art transcend its origins to become something entirely new? How do you distinguish your own contributions and attribute ownership to them?

Irrespective of how we address the plagiarism problem, I don’t see AI-generated art diminishing the need for artists or their impact. Art in its purest form serves as a counterpoint to the cultural norms of contemporary society. If everyone is producing Stable Diffusion art, certain predictable patterns begin to emerge that can feel soulless. The same can be said of ChatGPT or any AI-derived work. Folks have already noted that in Stable Diffusion “the default mode of these images is to shine and sparkle, as if illuminated from within”. True art will find a voice that shines through these mimics. On the other hand, mainstream artists who adopt derivative, orthodox forms of expression may become obsolete and be replaced by a new generation of artists who integrate technology into their art.

Balancing Progress with Needs of Society and Safety

To tackle the potential hazards of AI, we have to channel our innate ability to adapt. As much as we’d like to think we’re in charge of the progress of AI, we can’t possibly anticipate all of its consequences and risks, nor can we expect to control it with a set of rules and regulations. The genie is out of the bottle, and companies large and small will embrace AI in one way or another to keep up with growing competition. Companies that embrace AI will have a distinct advantage over those that are slow to adopt it. Therefore, it is imperative that we approach the development and integration of AI with humility, acknowledging the complexity and unpredictability of the technology, and actively seeking to examine, understand, and manage its potential impact on society and individual lives. Companies should also build AI systems that express doubt, and educate users about their margins of error.

Author tmzh

LastMod 2023-03-15