Introduction

Large Language Models (LLMs) are good at generating coherent text, but they have a few inherent limitations:

- Hallucinations: They generate text based on statistical likelihood, so they may produce information that is not grounded in facts.

- Knowledge Cutoff: LLMs are trained on a fixed dataset and do not have access to real-time information or the ability to perform complex tasks like web browsing or executing code.

- Abstraction and Reasoning: LLMs may struggle with abstract reasoning and complex tasks that require logical steps or mathematical operations. Without interfacing with external tools, their output is not precise enough for tasks with fixed rule sets.

Two common ways to address these limitations are:

- Retrieval Augmented Generation (RAG)

- Function Calling

This post focuses on the latter.

What is Function Calling?

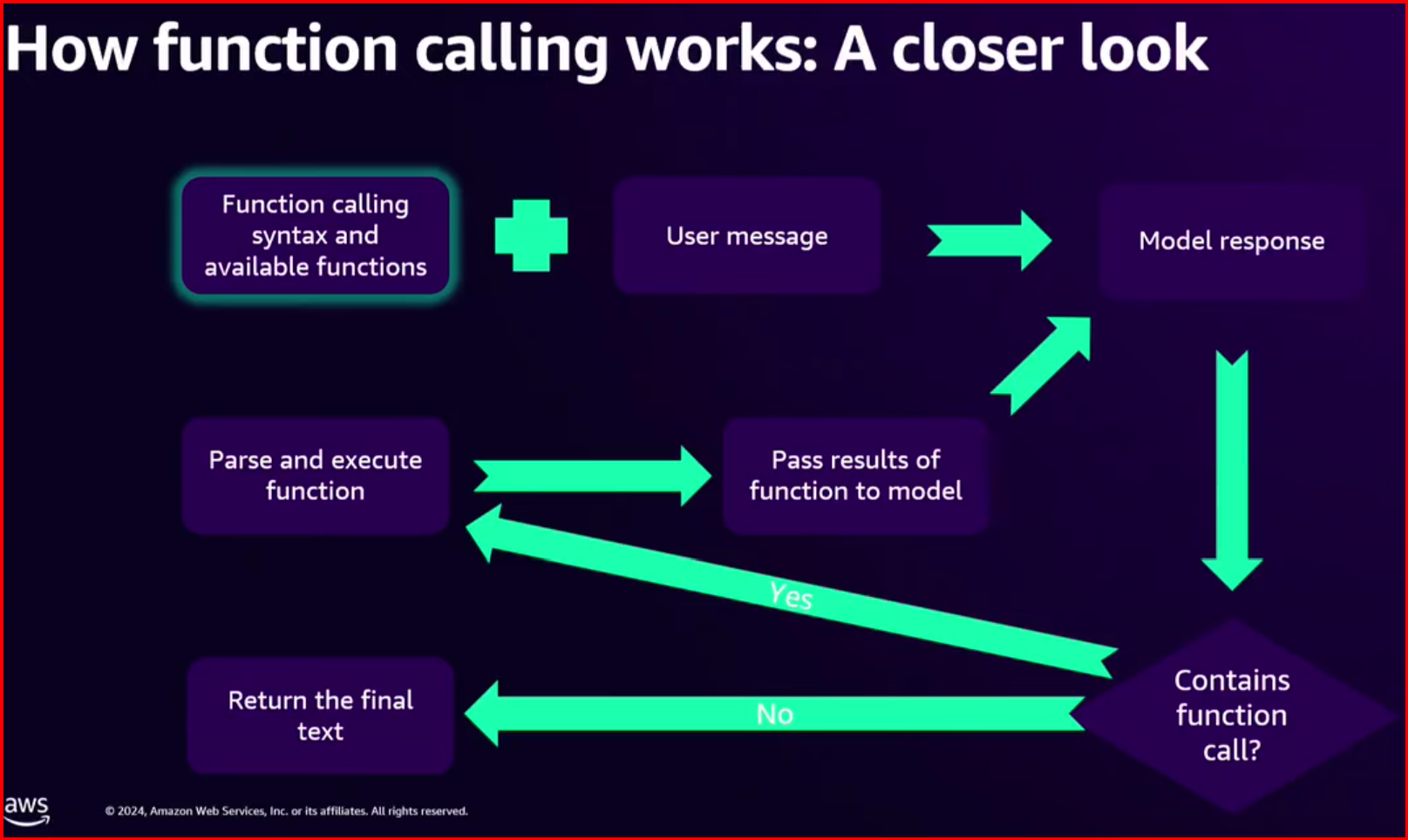

Function Calling enables LLMs to interact with external tools or APIs, thereby supplementing their knowledge and capabilities. The process is illustrated in the diagram above.

This approach helps overcome the limitations of knowledge cutoff and abstract reasoning by allowing LLMs to leverage external knowledge sources or tools. Function specifications can be passed as plain text in the prompt, but it is more effective to use the tool-calling template the model has internalized during training.

Function Calling As A Natural Language Interface

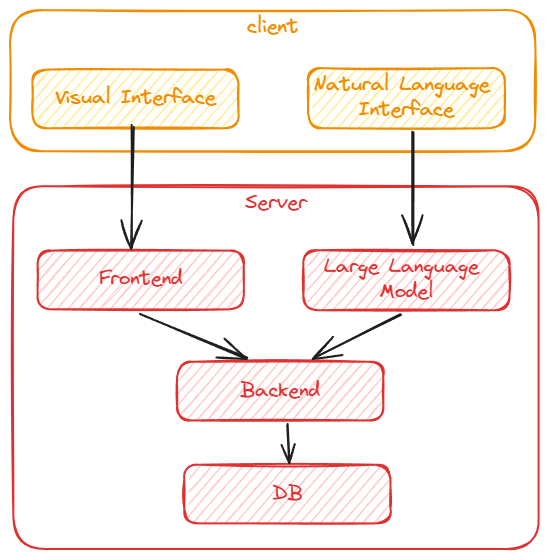

Function calling is not limited to simple tasks like calculator operations or weather API queries. It can be used to create an alternative, intuitive natural language interface for existing applications. This reduces the need for complex UIs, since users can interact with the application in plain English.

Example: TMDB Movie Explorer

I have implemented a simple Flask application with both a traditional UI and a Natural Language Interface that uses the function calling mechanism. The traditional UI lets users query movies by cast, genre, or title, whereas the Natural Language Interface lets them ask in natural language.

Chain of Thought Reasoning

To handle user queries, we employ a chain of thought (CoT) reasoning approach. This involves:

- Analyzing the User’s Request: Determine the intent behind the query.

- Generating a Reasoning Chain: Outline the logical steps required to gather the necessary information.

- Identifying Relevant Functions: Recognize which functions need to be called, and in what order, to fulfill the request.


For example, for the query “List comedy movies with Tom Cruise in it,” the generated reasoning chain might be:

- Search for the person ID of Tom Cruise using the `search_person` function.

- Search for the genre ID of comedy using the `search_genre` function.

- Call the `discover_movie` function with the person ID and genre ID to find comedy movies that Tom Cruise has been in.

Tool Definitions

Tools are described using JSON Schema and passed to the model in the prompt. Here’s an example of tool definitions:
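As a rough illustration, tool definitions in the OpenAI-style format might look like the sketch below. The three function names come from the reasoning chain described earlier; the parameter names (`query`, `person_id`, `genre_id`) and the descriptions are assumptions made for this example, not the application's actual schema.

```python
import json


def make_tool(name, description, properties, required):
    """Wrap a JSON Schema parameter block in the OpenAI-style tool envelope."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }


# Hypothetical definitions: parameter names and descriptions are assumptions.
tools = [
    make_tool(
        "search_person",
        "Search for a person by name and return matching person IDs.",
        {"query": {"type": "string", "description": "Name of the person"}},
        ["query"],
    ),
    make_tool(
        "search_genre",
        "Look up the ID of a movie genre by name.",
        {"query": {"type": "string", "description": "Name of the genre"}},
        ["query"],
    ),
    make_tool(
        "discover_movie",
        "Find movies filtered by cast member and/or genre.",
        {
            "person_id": {"type": "integer", "description": "ID of a cast member"},
            "genre_id": {"type": "integer", "description": "ID of a genre"},
        },
        [],
    ),
]

print(json.dumps(tools[0], indent=2))
```

Clear `description` fields matter here, since they are the only information the model has for deciding which function fits a request.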


We pass this message structure to the LLM to generate a reasoning chain. Here we use the llama3-groq-70b-8192-tool-use-preview model from Groq, a 70B-parameter model fine-tuned for tool use.
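A minimal sketch of that request, assuming a client that exposes the OpenAI-style `chat.completions.create` method (as the Groq Python SDK does). The system prompt wording and the helper names `build_messages` and `request_completion` are hypothetical, chosen for this example only.

```python
MODEL = "llama3-groq-70b-8192-tool-use-preview"

# Hypothetical system prompt; the wording is an assumption for illustration.
SYSTEM_PROMPT = (
    "You are a movie search assistant. Analyze the user's request, "
    "outline the steps needed to answer it, and call the available "
    "functions in the right order."
)


def build_messages(user_query):
    """Return the initial conversation: system prompt plus the user's query."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_query},
    ]


def request_completion(client, messages, tools):
    """Ask the model for its next step, letting it decide whether to call a tool.

    `client` is assumed to be created elsewhere, for example with
    `from groq import Groq; client = Groq()`.
    """
    return client.chat.completions.create(
        model=MODEL,
        messages=messages,
        tools=tools,
        tool_choice="auto",  # the model chooses between answering and calling a tool
    )
```

Passing the client in as an argument keeps the function easy to exercise with a stand-in object during testing.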


Each tool is a Python function, like the one below, that calls the TMDB API to retrieve the data.
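One such tool could be sketched as follows, assuming the TMDB v3 `/search/person` endpoint with an API key read from a `TMDB_API_KEY` environment variable. The return shape (a list of `id`/`name` pairs) and the injectable `fetch` parameter are choices made for this example, not necessarily what the app does.

```python
import json
import os
import urllib.parse
import urllib.request

TMDB_BASE_URL = "https://api.themoviedb.org/3"


def build_search_person_url(query, api_key):
    """Build the request URL for a TMDB person search."""
    params = urllib.parse.urlencode({"query": query, "api_key": api_key})
    return f"{TMDB_BASE_URL}/search/person?{params}"


def _http_get_json(url):
    """Fetch a URL and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def search_person(query, api_key=None, fetch=_http_get_json):
    """Return a list of {"id", "name"} dicts for people matching `query`.

    `fetch` is a hypothetical hook that makes the function testable offline.
    """
    api_key = api_key or os.environ.get("TMDB_API_KEY", "")
    data = fetch(build_search_person_url(query, api_key))
    # Keep only the fields the model needs for the next step.
    return [{"id": p["id"], "name": p["name"]} for p in data.get("results", [])]
```

Returning a trimmed result keeps the conversation history small, which matters because every tool response is sent back to the model.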


Here is an example of the reasoning chain generated for the query “List comedy movies with Tom Cruise in it”


Handling Function Calls

The model returns a response containing tool calls. We extract the function name and arguments from the response and execute the function. The function’s response is then added to the conversation history.
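The steps in this paragraph can be sketched as below, assuming an OpenAI-style response object where each tool call carries an `id`, a `function.name`, and a JSON-encoded `function.arguments` string. The helper name `handle_tool_calls` and the `available_functions` mapping are hypothetical.

```python
import json


def handle_tool_calls(response, messages, available_functions):
    """Run every tool call in `response` and append the results to `messages`.

    Returns True if at least one tool call was handled, False otherwise.
    """
    message = response.choices[0].message
    tool_calls = message.tool_calls or []
    if not tool_calls:
        return False

    # Record the assistant turn that requested the calls.
    messages.append({
        "role": "assistant",
        "content": message.content or "",
        "tool_calls": [
            {
                "id": call.id,
                "type": "function",
                "function": {
                    "name": call.function.name,
                    "arguments": call.function.arguments,
                },
            }
            for call in tool_calls
        ],
    })

    for call in tool_calls:
        function = available_functions[call.function.name]
        arguments = json.loads(call.function.arguments)  # arguments arrive as a JSON string
        result = function(**arguments)
        # Feed the result back so the model can use it in its next step.
        messages.append({
            "role": "tool",
            "tool_call_id": call.id,
            "name": call.function.name,
            "content": json.dumps(result),
        })
    return True
```

After one round, `messages` holds the original prompt, the assistant turn listing the requested calls, and one `tool` entry per executed function.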


The conversation history now looks like this:


Recursive Function Calls

We recursively call the functions recommended by the LLM until we reach the final function call. The final `discover_movie` call consolidates all the gathered parameters and data into a single request that retrieves the list of movies relevant to the user’s inquiry.
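A sketch of this repeated calling, written as a bounded loop rather than literal recursion. It assumes a hypothetical `call_model(messages)` callable that returns an OpenAI-style chat completion, and it stops when the model no longer requests a tool, which may differ from how the app decides it has reached the final call. The `max_rounds` limit is an added safety measure, not something taken from the original code.

```python
import json


def run_conversation(call_model, messages, available_functions, max_rounds=5):
    """Keep asking the model for its next step until it stops requesting tools."""
    for _ in range(max_rounds):
        message = call_model(messages).choices[0].message
        tool_calls = message.tool_calls or []
        if not tool_calls:
            return message.content  # no more functions to call

        # Record the assistant turn so the tool results have something to answer.
        messages.append({
            "role": "assistant",
            "content": message.content or "",
            "tool_calls": [
                {
                    "id": call.id,
                    "type": "function",
                    "function": {
                        "name": call.function.name,
                        "arguments": call.function.arguments,
                    },
                }
                for call in tool_calls
            ],
        })

        for call in tool_calls:
            function = available_functions[call.function.name]
            result = function(**json.loads(call.function.arguments))
            messages.append({
                "role": "tool",
                "tool_call_id": call.id,
                "name": call.function.name,
                "content": json.dumps(result),
            })

    raise RuntimeError(f"Model still requested tools after {max_rounds} rounds")
```

The round limit guards against a model that keeps requesting the same function indefinitely.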


Lessons learnt and Caveats

Keep in mind that the limitations and perks of prompt engineering apply:

- Simplicity: Keep the function calls simple and concise. The more complex the function calls, the more likely it is that the model will make mistakes.

- Parameter Validation: Function parameter validation is not handled by the model. It is up to the developer to ensure that the parameters are valid.

- Guardrails: Establish guardrails to prevent the model from calling functions that it should not call.
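The parameter validation and guardrail points above could be combined in a small check that runs before any function is executed. This is a minimal sketch: the allowlist contents, the parameter names (`query`, `person_id`, `genre_id`), and the helper name `validate_tool_call` are all assumptions for illustration.

```python
# Hypothetical allowlist: function name -> expected parameter types.
ALLOWED_FUNCTIONS = {
    "search_person": {"query": str},
    "search_genre": {"query": str},
    "discover_movie": {"person_id": int, "genre_id": int},
}


def validate_tool_call(name, arguments):
    """Return `arguments` if the call is allowed and well-typed, else raise ValueError.

    Parameters are treated as optional here; only unknown names and wrong
    types are rejected.
    """
    if name not in ALLOWED_FUNCTIONS:
        raise ValueError(f"Function not allowed: {name}")

    expected = ALLOWED_FUNCTIONS[name]
    unknown = set(arguments) - set(expected)
    if unknown:
        raise ValueError(f"Unexpected parameters for {name}: {sorted(unknown)}")

    for param, value in arguments.items():
        if not isinstance(value, expected[param]):
            raise ValueError(f"{param} must be of type {expected[param].__name__}")
    return arguments
```

Calling this before looking up and executing a function means a hallucinated function name or a malformed argument fails loudly instead of reaching the external API.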

Remember that the model is just one component of the system. The effectiveness and safety of the model depend on how it is used and integrated into the broader context. It is the developer’s responsibility to tailor safety measures to their specific use case and ensure that the model’s outputs are safe and appropriate for the context.

The code above, along with a working app, is available on HF Spaces.